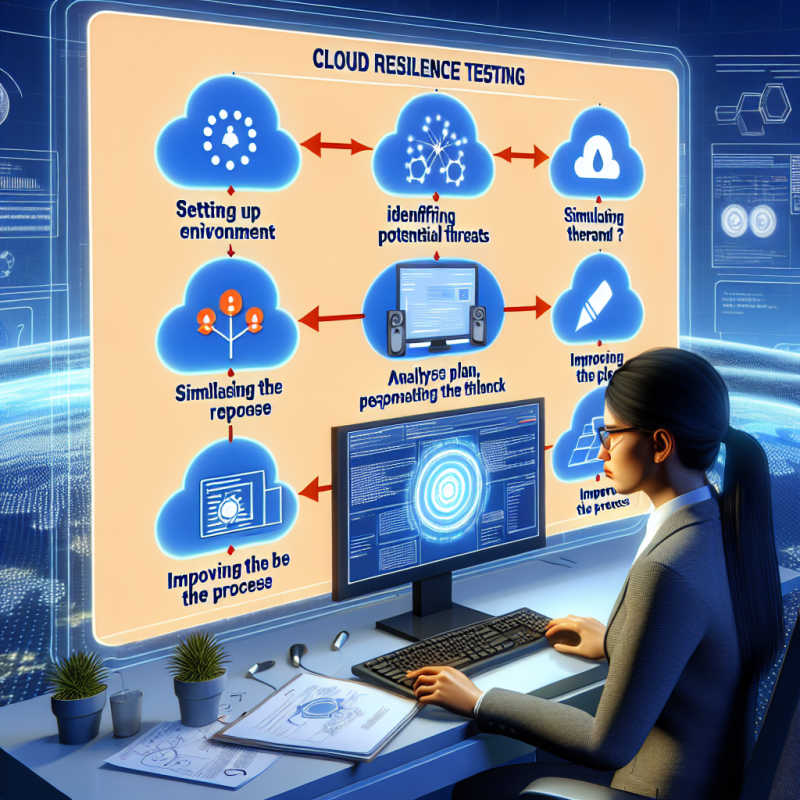

Best Practices for Testing Your Cloud Resiliency Plan

This guide covers how to test a cloud resiliency plan end to end: identifying the components that most need testing, designing realistic disaster scenarios, automating tests so that resilience is verified continuously, and using cloud-native tools to run them. It also covers how to interpret test results so they lead to concrete fixes, and why regular updates and re-testing are needed to keep the plan effective as your environment changes.

Identifying Key Components of Your Cloud Infrastructure for Effective Resiliency Testing

Effective resiliency testing starts with identifying and prioritizing the components that are critical to your organization’s operational continuity. That requires a thorough understanding of your cloud architecture: data storage, computing resources, network configurations, and application dependencies. Case studies, such as one from a leading financial services firm, underscore the value of segmenting cloud components by their criticality to business functions. This firm mitigated potential downtime by rigorously testing its most critical components, such as transaction processing systems and customer data repositories, under simulated outage scenarios. Prioritizing in this way makes testing more targeted and efficient, and it shows whether each component can actually meet its recovery time objective (RTO, the maximum acceptable time to restore service) and recovery point objective (RPO, the maximum acceptable window of data loss). Gaps found in testing can then be closed before a real outage, which minimizes disruption to business operations and customer services.
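One way to make this prioritization concrete is to record each component's criticality tier and recovery objectives, then derive the testing order from them. The following is a minimal sketch under stated assumptions: the `Component` fields, the three-tier scheme, and every inventory entry are hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    name: str
    tier: int          # 1 = most critical to business functions (hypothetical scheme)
    rto_minutes: int   # objective: maximum acceptable time to restore service
    rpo_minutes: int   # objective: maximum acceptable window of data loss


def prioritize(components):
    """Order components for testing: most critical tier first,
    then tightest RTO, then tightest RPO."""
    return sorted(components, key=lambda c: (c.tier, c.rto_minutes, c.rpo_minutes))


# Hypothetical inventory, for illustration only.
inventory = [
    Component("reporting-dashboard", tier=3, rto_minutes=480, rpo_minutes=1440),
    Component("transaction-processing", tier=1, rto_minutes=15, rpo_minutes=1),
    Component("customer-data-store", tier=1, rto_minutes=30, rpo_minutes=5),
    Component("internal-wiki", tier=3, rto_minutes=1440, rpo_minutes=1440),
]

for component in prioritize(inventory):
    print(f"tier {component.tier}: {component.name} "
          f"(RTO {component.rto_minutes} min, RPO {component.rpo_minutes} min)")
```

Sorting on a tuple keeps the rule explicit and easy to change, for example if your organization weighs RPO above RTO for data stores.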

Crafting Realistic Disaster Scenarios to Challenge Your Cloud Environment

Realistic disaster scenarios are central to verifying the robustness of your cloud infrastructure. A resilient environment comes not from theoretical planning alone but from simulating scenarios that closely mimic real-world failures. Doing so exposes vulnerabilities while there is still time to address them. Cover a wide range of scenarios, including cyber-attacks, natural disasters, and system failures, so that the tests exercise your cloud setup comprehensively.

A simulation is only as useful as the actionable insights it produces. That means not only identifying weaknesses but also understanding their impact on your operations and devising strategies to mitigate them. Regularly updating your disaster recovery plans and testing them against these scenarios helps keep your cloud environment resilient as threats evolve. Engaging cloud resilience experts can also provide feedback and recommendations that strengthen your strategy and help your infrastructure withstand, and recover quickly from, disruptions.

Implementing Automated Testing Strategies for Continuous Cloud Resilience Verification

Keeping cloud-based applications running requires a proactive approach to resilience testing. Automated tests are a critical part of that approach because they let teams simulate a wide range of failure scenarios without manual intervention. The key to making them effective is integrating them into the development and deployment pipelines. This integration streamlines the process and makes resilience verification an ongoing part of the software lifecycle rather than a one-time checklist item.

A multi-layered testing approach works best. It combines unit tests, integration tests, and chaos engineering practices to assess the system’s behavior under various conditions. Automated tools that inject failures at different levels (application, network, and infrastructure) give organizations insight into potential vulnerabilities. Automating the analysis of test results also helps teams identify and address issues quickly, which shortens recovery time when an actual outage occurs. This proactive stance safeguards operations and strengthens customer trust and satisfaction.
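At the application level, failure injection can be as small as a wrapper that makes a dependency call fail some of the time, with a pipeline test asserting that the retry logic absorbs those failures. The sketch below is illustrative only: `fetch_balance` is a hypothetical stand-in for a downstream call, and the failure rate, trial count, and seed are arbitrary assumptions.

```python
import random


class InjectedFault(Exception):
    """Raised in place of a real dependency failure."""


def with_faults(func, failure_rate, rng):
    """Wrap func so that roughly failure_rate of calls raise InjectedFault."""
    def wrapper(*args, **kwargs):
        if rng.random() < failure_rate:
            raise InjectedFault(f"injected failure in {func.__name__}")
        return func(*args, **kwargs)
    return wrapper


def call_with_retry(func, attempts):
    """Call func up to `attempts` times and return the first successful result."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for _ in range(attempts):
        try:
            return func()
        except InjectedFault as error:
            last_error = error
    raise last_error


def fetch_balance():
    # Hypothetical stand-in for a call to a downstream service.
    return 100


def measure_success_rate(attempts, failure_rate=0.3, trials=1000, seed=42):
    """Fraction of trials in which the call succeeded within `attempts` tries."""
    rng = random.Random(seed)  # seeded so pipeline runs are repeatable
    flaky = with_faults(fetch_balance, failure_rate, rng)
    successes = 0
    for _ in range(trials):
        try:
            call_with_retry(flaky, attempts)
            successes += 1
        except InjectedFault:
            pass
    return successes / trials


print("1 attempt: ", measure_success_rate(attempts=1))
print("3 attempts:", measure_success_rate(attempts=3))
```

A pipeline could fail the build when the measured success rate with retries drops below an agreed threshold, which turns the resilience check into a routine gate rather than a manual exercise.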

Leveraging Cloud Native Tools and Services for Enhanced Resiliency Testing

Cloud providers offer a range of native tools and services for testing the resiliency of cloud architectures. Among these, AWS Fault Injection Simulator (since renamed AWS Fault Injection Service) and Azure Chaos Studio stand out for their ability to simulate real-world failures that test the durability and flexibility of cloud deployments. These tools automate fault injection, and the results point to concrete changes that improve system robustness. By integrating these services into resiliency testing, organizations gain a clearer understanding of how their systems behave under stress, which leads to better disaster recovery strategies.

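The table below compares three cloud-native tools by the type of testing they support. Note that the first two inject faults, while Google Cloud Operations Suite is a monitoring and logging service that observes how a system behaves during a test rather than causing failures itself.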

| Tool | Type of Tests | Cloud Provider | Use Case Example |
|---|---|---|---|
| AWS Fault Injection Simulator | Fault Injection | AWS | Simulating server outages to test auto-scaling |
| Azure Chaos Studio | Chaos Engineering | Azure | Testing network latency and its impact on application performance |
| Google Cloud Operations Suite | Monitoring and Logging | Google Cloud | Real-time monitoring to identify and mitigate performance bottlenecks |

This comparison underscores the importance of selecting the right tool that aligns with the specific needs and challenges of a cloud environment. By leveraging cloud-native services tailored to their unique requirements, organizations can significantly enhance their resiliency testing efforts, ensuring their systems are well-prepared to handle unexpected disruptions.
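As one illustration of wiring such a service into an automated run, the sketch below starts an AWS fault injection experiment from an existing experiment template and polls until it leaves the in-progress states. It assumes the client object behaves like `boto3.client("fis")`, using its `start_experiment` and `get_experiment` calls. The polling interval and timeout are arbitrary, and the list of in-progress status names is an assumption that should be checked against the current API reference.

```python
import time
import uuid

# Assumed in-progress status names; anything else is treated as final.
IN_PROGRESS = {"pending", "initiating", "running", "stopping"}


def run_experiment(fis_client, template_id, poll_seconds=15, timeout_seconds=1800):
    """Start an experiment from an existing template and wait for it to finish.

    fis_client is expected to behave like boto3.client("fis").
    Returns the final status string reported by the service.
    """
    started = fis_client.start_experiment(
        clientToken=str(uuid.uuid4()),     # idempotency token for the request
        experimentTemplateId=template_id,
    )
    experiment_id = started["experiment"]["id"]

    deadline = time.monotonic() + timeout_seconds
    while True:
        experiment = fis_client.get_experiment(id=experiment_id)["experiment"]
        status = experiment["state"]["status"]
        if status not in IN_PROGRESS:
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"experiment {experiment_id} is still {status}")
        time.sleep(poll_seconds)
```

Passing the client in as a parameter keeps the function testable with a stub and free of credentials handling. With real credentials, a call might look like `run_experiment(boto3.client("fis"), "<your-template-id>")`, where the template ID is one you have already created.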

Analyzing Test Results to Identify Weaknesses and Optimize Cloud Resilience

After conducting rigorous tests on your cloud resiliency plan, the next critical step involves a thorough analysis of the results. This process is pivotal in uncovering any vulnerabilities within your cloud infrastructure. A comprehensive review allows teams to pinpoint specific areas that did not perform as expected under simulated disruptions. For instance, a case study involving a major e-commerce platform revealed that during peak traffic times, their cloud services struggled to auto-scale efficiently, leading to significant downtime. By analyzing these test results, the company was able to identify the auto-scaling issue as a critical weakness and subsequently optimized their cloud resilience strategy to handle sudden spikes in demand more effectively.
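A first pass over drill results can be mechanical: compare what was measured against each component's objectives and list every miss. The sketch below assumes results are recorded with the hypothetical fields shown, and all figures are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DrillResult:
    component: str
    rto_minutes: float        # objective for restoring service
    rpo_minutes: float        # objective for tolerable data loss
    recovery_minutes: float   # measured during the drill
    data_loss_minutes: float  # measured during the drill


def find_breaches(results):
    """Return (component, metric, measured, objective) for every missed objective."""
    breaches = []
    for r in results:
        if r.recovery_minutes > r.rto_minutes:
            breaches.append((r.component, "RTO", r.recovery_minutes, r.rto_minutes))
        if r.data_loss_minutes > r.rpo_minutes:
            breaches.append((r.component, "RPO", r.data_loss_minutes, r.rpo_minutes))
    return breaches


# Hypothetical drill results, for illustration only.
drill_results = [
    DrillResult("transaction-processing", 15, 1, recovery_minutes=12, data_loss_minutes=0.5),
    DrillResult("customer-data-store", 30, 5, recovery_minutes=47, data_loss_minutes=3),
    DrillResult("reporting-dashboard", 480, 1440, recovery_minutes=200, data_loss_minutes=1500),
]

for component, metric, measured, objective in find_breaches(drill_results):
    print(f"{component}: {metric} missed ({measured} min measured, {objective} min objective)")
```

The resulting list is a starting point for the review, not a substitute for it: each breach still needs someone to work out why the objective was missed.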

Moreover, the analysis phase should extend beyond identifying weaknesses, aiming to understand the root cause of each failure. This deeper dive into the test outcomes enables organizations to implement more targeted improvements. A notable example can be seen in the financial sector, where a leading bank experienced latency issues during disaster recovery drills. The detailed analysis revealed that the latency was primarily due to outdated data replication techniques. As a result, the bank invested in modernizing their data replication methods, significantly enhancing their cloud resilience. This approach underscores the importance of not just identifying weaknesses but understanding their underlying causes to make informed decisions.

Finally, analytics tools and machine learning algorithms can significantly streamline the analysis of test results. These technologies can quickly sift through large volumes of data to identify patterns and anomalies that might not be immediately apparent. For example, a healthcare provider used machine learning to analyze its test results and discovered that certain data backup processes were not as robust as believed. With this insight, the provider focused its efforts on strengthening those areas, enhancing its overall cloud resilience. This example illustrates how advanced analytics can help any organization looking to bolster its cloud resiliency plan.
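Anomaly detection does not have to start with machine learning. As a simpler stand-in, the sketch below flags outliers in a series of measurements using a modified z-score based on the median absolute deviation. The 0.6745 scaling constant and the 3.5 cutoff are the commonly cited defaults for this statistic, the function name is hypothetical, and the sample durations are invented.

```python
from statistics import median


def flag_outliers(samples, threshold=3.5):
    """Return indices of samples whose modified z-score exceeds threshold.

    Uses the median absolute deviation (MAD), which is less distorted by
    the outliers themselves than a mean and standard deviation would be.
    """
    center = median(samples)
    mad = median(abs(x - center) for x in samples)
    if mad == 0:
        return []  # no spread to compare against
    return [
        index
        for index, x in enumerate(samples)
        if 0.6745 * abs(x - center) / mad > threshold
    ]


# Hypothetical backup-restore durations in minutes from successive drills.
restore_minutes = [41, 39, 40, 42, 38, 40, 95, 41]

for index in flag_outliers(restore_minutes):
    print(f"drill {index}: {restore_minutes[index]} min looks anomalous")
```

A statistical baseline like this is often worth having before investing in a learned model, since it shows what a more complex approach needs to improve on.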

Maintaining Cloud Resilience: Regular Updates and Re-testing Best Practices

Maintaining cloud resilience requires a proactive approach built on regular updates and comprehensive re-testing of your cloud infrastructure, so that your systems remain robust against evolving threats and operational challenges. With continuous integration and continuous deployment (CI/CD) pipelines, organizations can automate the deployment of updates, which reduces the risk of human error and helps ensure that resilience measures are applied consistently across all cloud environments.

Cloud service providers differ in how updates and testing are managed. For instance, AWS offers AWS CloudFormation, which lets users model and provision AWS and third-party resources declaratively from templates. Microsoft Azure uses Azure Resource Manager (ARM) for a similar purpose, with differences in template structure and deployment methodology. The comparison table below highlights these differences, which matter when selecting a cloud provider that aligns with your organization’s resilience strategy:

| Feature | AWS | Azure |
|---|---|---|
| Template Language | JSON, YAML | JSON (ARM templates), Bicep |
| Management Console | AWS Management Console | Azure Portal |
| Deployment Model | Declarative (templates deployed as stacks) | Declarative (templates deployed to a scope such as a resource group) |

Adopting a culture of continuous testing and learning is crucial for maintaining cloud resilience. This involves not only regular updates and deployments but also the implementation of chaos engineering practices. By intentionally injecting failures into the cloud environment, organizations can assess the real-world effectiveness of their resilience strategies. This hands-on approach helps in identifying vulnerabilities that might not be evident during standard testing procedures, thereby enhancing the overall robustness of cloud services.

Frequently Asked Questions

What are the most common challenges in maintaining cloud resilience?

The most common challenges include managing complex cloud architectures, ensuring data integrity during disasters, adapting to evolving security threats, and keeping pace with the continuous integration and delivery of applications. Balancing cost against resilience is a further significant challenge.

How often should I re-test my cloud resilience plan?

It’s recommended to re-test your cloud resilience plan at least twice a year. However, if your cloud environment undergoes significant changes or if there are new potential threats, additional testing should be conducted to ensure your resilience measures are still effective.
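This rule of thumb is simple enough to encode as a scheduled check. The sketch below treats "twice a year" as an interval of roughly 182 days, which is an assumption, and the function and flag names are hypothetical.

```python
from datetime import date, timedelta

RETEST_INTERVAL = timedelta(days=182)  # roughly twice a year (assumed cadence)


def retest_due(last_test, today, significant_change=False, new_threat=False):
    """Return True when the resilience plan should be re-tested."""
    if significant_change or new_threat:
        return True  # environment or threat landscape changed: test now
    return today - last_test >= RETEST_INTERVAL


print(retest_due(date(2024, 1, 10), date(2024, 3, 1)))
print(retest_due(date(2024, 1, 10), date(2024, 8, 1)))
print(retest_due(date(2024, 1, 10), date(2024, 1, 11), significant_change=True))
```

Running a check like this from a scheduler or a pipeline step keeps the re-test from depending on someone remembering the calendar.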

Can cloud resilience testing impact my live environment?

Yes, if not carefully managed, resilience testing can impact your live environment. It’s crucial to use isolated environments or schedule tests during low-traffic periods to minimize potential disruptions. Employing cloud-native tools that support testing in isolated instances can also mitigate risks to live environments.
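A guard in the test tooling can enforce this automatically. The following sketch allows experiments in any non-production environment and restricts production runs to a low-traffic window. The environment names and the 01:00 to 04:00 window are hypothetical and would need to match your own traffic patterns.

```python
from datetime import time


def may_run(environment, now, window_start=time(1, 0), window_end=time(4, 0)):
    """Decide whether a resilience test may start.

    Isolated (non-production) environments are always allowed; production
    is allowed only inside the low-traffic window [window_start, window_end).
    """
    if environment != "production":
        return True
    return window_start <= now < window_end


print(may_run("staging", time(14, 0)))
print(may_run("production", time(2, 30)))
print(may_run("production", time(14, 0)))
```

The sketch assumes the window does not cross midnight; a window such as 23:00 to 02:00 would need the comparison split into two ranges.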

What role does automation play in cloud resilience?

Automation plays a critical role in cloud resilience by enabling continuous testing, monitoring, and recovery processes. It helps in quickly identifying vulnerabilities, deploying updates, and ensuring high availability without manual intervention, thus significantly reducing the risk of downtime.

How can I ensure my data is protected during resilience testing?

To protect your data during resilience testing, ensure that all tests are conducted in a controlled environment using test data. Implement robust access controls and encryption to safeguard your data. Additionally, regularly back up your data to prevent any loss or corruption during testing.
